1. Introduction

American options allow the holder to exercise at any time up to expiration, making their pricing significantly more challenging than that of European options. The Least Squares Monte Carlo (LSMC) method, popularized by Longstaff and Schwartz (2001), is a powerful numerical approach that combines:

- Monte Carlo path simulation under the risk-neutral measure.

- Backward induction to handle early-exercise decisions.

- Regression to approximate the conditional continuation value.

This blog post provides a mathematically rigorous yet practically oriented overview of LSMC, focusing on the key equations, tight derivations, and essential C++ snippets (without large code dumps). We will also briefly discuss control variates and finite-difference Greeks.

2. Mathematical Foundations

2.1 American Option Pricing via Backward Induction

Consider an American option with payoff function

\[ \text{Payoff}(S_t) = \begin{cases} \max(S_t - K, \, 0), & \text{(call)} \\ \max(K - S_t, \, 0), & \text{(put)} \end{cases} \]

Let the option’s maturity be \(T\), and partition \([0, T]\) into \(M\) discrete steps of size \(\Delta t = \frac{T}{M}\). At each time step \(t\), the holder chooses between immediate exercise and continuation:

\[

V_t(S_t) = \max \Big(\text{Payoff}(S_t), \; e^{-(r - q)\Delta t} \, \mathbb{E}\big[V_{t+1}(S_{t+1}) \mid S_t\big]\Big)

\]

where

- \(r\) is the risk-free rate,

- \(q\) is the dividend yield,

- \(\mathbb{E}[\cdot]\) is taken under the risk-neutral measure.

Key Insight: Directly computing the conditional expectation \(\mathbb{E}[V_{t+1} \mid S_t]\) is difficult. LSMC replaces it with a regression on simulated paths.

2.2 Least Squares Approximation

We simulate \(N\) paths \(\{S_t^{(i)}\}_{t=0}^{M}\) of the underlying asset under geometric Brownian motion or another appropriate model. At each discrete step \(t \!<\! M\), for the paths that are in the money (ITM), we regress the discounted future payoff on a set of basis functions \(\{\phi_j(x)\}_{j=0}^{p}\). For example, polynomial bases:

\[ \mathbb{E}[\,V_{t+1} \mid S_t = x\,] \;\approx\; \sum_{j=0}^p \beta_j\, \phi_j(x) \]

Then we compare the predicted continuation \(\widehat{C}_t(x)\) to the immediate exercise payoff. If the latter is higher, we mark the path as exercising; otherwise, it continues.

3. LSMC Backward Induction Step by Step

- Simulate Paths:

Generate \(\{S_0^{(i)}, \ldots, S_M^{(i)}\}\) under the risk-neutral process (e.g., GBM with drift \((r - q - \tfrac{1}{2} \sigma^2)\), \(\Delta t (r - q - \tfrac{1}{2} \sigma^2)\) and volatility \(\sigma \sqrt{\Delta t}\) ). - Initialization at t=M:

\[

V_M^{(i)} \;=\; \text{Payoff}\bigl(S_M^{(i)}\bigr) \quad \forall i

\]

- Backward Induction (t=M−1, M−2, …, 1):

- Identify ITM paths if \(S_t^{(i)}\) is above (for calls) or below (for puts) the strike \(K\).

- Regress the discounted \(V_{t+1}^{(i)}\) on basis functions \(\phi_j(S_t^{(i)})\).

- Compare the fitted continuation value \(\widehat{C}_t\bigl(S_t^{(i)}\bigr)\) to \(\text{Payoff}\bigl(S_t^{(i)}\bigr)\).

- Decide whether the path exercises or continues at \(t\).

- Estimate the Option Price:

Average \(V_0^{(i)}\) (discounted if needed) across all \(i\), possibly adjusting by a control variate (see below).

4. Control Variate Technique

A control variate reduces variance by leveraging a correlated instrument with a known or easily computed price. For instance, a Black–Scholes European option can serve as the control:

\[

V_{\text{CV}} \;=\; V_{\text{LSMC}} \;+\; \beta \,\bigl( Y_0 - Y_{\text{MC}} \bigr)

\]

where

- \(Y_0\) is the closed-form BS price at \(t = 0\),

- \(Y_{\text{MC}}\) is the Monte Carlo mean of the same payoff across paths,

- \(\beta \approx \frac{\operatorname{Cov}(V, Y)}{\operatorname{Var}(Y)}\).

In practice, we store both the American payoff \(V\) and the European payoff \(Y\) across each path, then compute \(\beta\). The resulting variance reduction can be substantial.

5. Finite-Difference Greeks

We can estimate Greeks (Delta, Gamma, Vega, etc.) by repeated LSMC runs:

- Delta: \[

\Delta \;\approx\; \frac{V(S + \epsilon) - V(S - \epsilon)}{2\epsilon}

\] - Gamma: \[

\Gamma \;\approx\; \frac{V(S + \epsilon) + V(S - \epsilon) - 2\,V(S)}{\epsilon^2}

\] - Vega: \[

\text{Vega} \;\approx\; \frac{V(\sigma + \epsilon) - V(\sigma - \epsilon)}{2\epsilon}

\]

Although multiple runs are computationally expensive, it is a straightforward approach that aligns well with a modular LSMC design.

6. Key C++ Snippets (Illustrative)

Below are core snippets explaining the key LSMC steps, the complete code can be found here. We omit large code segments; the idea is to highlight the algorithmic structure.

6.1 Backward Induction Outline

The price() function showcases the core LSMC backward-induction logic:

void price() {

// 1) At t = M, set payoff

for (int i = 0; i < sims_; i++) {

V_[i] = payoff(M, i); // e.g., max(S_M[i] - K, 0) for calls

}

// 2) Backward induction: from M-1 down to 1

for (int t = M_ - 1; t >= 1; t--) {

// Collect data for regression

// dataX: S_t, dataY: discounted next-step option value

vector<double> dataX(sims_), dataY(sims_);

for(int i = 0; i < sims_; i++){

dataX[i] = paths_[t * sims_ + i];

dataY[i] = V_[i] * discount_;

}

// Regress continuation value

vector<double> contVal(sims_);

polynomialRegression(dataX, dataY, contVal);

// Compare contVal vs. immediate payoff

for (int i = 0; i < sims_; i++) {

double immediate = payoff(t, i);

if (immediate > contVal[i]) {

V_[i] = immediate; // exercise

} else {

V_[i] *= discount_; // continue

}

}

}

// 3) Estimate price at t=0

// Possibly apply control variate

double avgVal = mean(V_);

V0_ = applyControlVariate(avgVal);

}

- Terminal condition: At t=M (maturity), the option value on each path is simply the payoff.

- Backward loop: For each t=M−1, …, 1, we:

- Discount the next-step value,

- Use regression to estimate the continuation value,

- Compare it with the immediate exercise payoff.

- Final average: At t=0, we average the resulting option values across all paths. If control variate is enabled, the raw mean gets corrected.

6.2 Polynomial Regression

This helper function, polynomialRegression(), fits a polynomial to approximate \(\mathbb{E}[V_{t+1} \mid S_t]\). We use the Eigen library for matrix operations:

void polynomialRegression(const vector<double>& X,

const vector<double>& Y,

vector<double>& outCont)

{

// Build matrix A and vector b

Eigen::MatrixXd A(X.size(), n_poly_ + 1);

Eigen::VectorXd bVec(X.size());

for (int i = 0; i < (int)X.size(); i++) {

double x = X[i];

for(int p = 0; p <= n_poly_; p++){

A(i, p) = std::pow(x, p);

}

bVec(i) = Y[i];

}

// Solve A^T A coef = A^T b

auto coef = (A.transpose() * A)

.bdcSvd(Eigen::ComputeThinU | Eigen::ComputeThinV)

.solve(A.transpose() * bVec);

// Evaluate polynomial

for (int i = 0; i < (int)X.size(); i++) {

double val = 0.0;

for(int p=0; p <= n_poly_; p++){

val += coef[p] * std::pow(X[i], p);

}

outCont[i] = val;

}

}

- We construct a design matrix \(A\) with rows corresponding to paths and columns to polynomial terms \(1, x, x^2, \dots\)

- We build a right-hand-side vector \(b\) from the discounted option values.

- Solve the least squares problem \(\min \|A\beta - b\|\). The result \(\beta\) captures \(\beta_0, \beta_1, \dots, \beta_{n\_poly}\).

- Finally, we compute the continuation values for each path via the polynomial approximation.

In practice, we only do this on ITM paths or we may filter them. Various polynomial degrees can be tested for best performance vs. overfitting.

6.3 Control Variate Application

If useCV_ is true, we refine the raw LSMC estimate with a control variate adjustment:

double applyControlVariate(double rawMean) {

if (!useCV_) return rawMean;

// Suppose we have stored Y_i for the control (European payoff)

// and we have an analytic price bs0 for it

double meanY = mean(Y_);

double covVY = sampleCovariance(V_, Y_);

double varY = sampleVariance(Y_);

double b = (fabs(varY) < 1e-14) ? 0.0 : covVY / varY;

return rawMean + b * (bs0_ - meanY);

}

- Storing data: During path simulation, we also track a control payoff \(Y_i\) (e.g., the payoff of a corresponding European option).

- Closed-form: We know the analytic price \(\text{BS}_0\) (e.g., using the Black–Scholes formula).

- Covariance & Variance: We estimate \(\operatorname{Cov}(V, Y)\) and \(\operatorname{Var}(Y)\) by sample formulas: \[

\operatorname{Cov}(V, Y) \approx \frac{1}{N} \sum_{i=1}^N (V_i - \bar{V})(Y_i - \bar{Y}),\] \[\quad

\operatorname{Var}(Y) \approx \frac{1}{N} \sum_{i=1}^N (Y_i - \bar{Y})^2

\] - Control Variate Correction: \[

V_{\text{CV}} \;=\; \text{rawMean} \;+\; b \,\bigl(\text{BS}_0 - \bar{Y}\bigr), \quad

b = \frac{\operatorname{Cov}(V, Y)}{\operatorname{Var}(Y)}.

\]

The idea is that the difference \(\bar{Y} - \text{BS}_0\) (the estimation error of the control) strongly correlates with the estimation error in \(V\). By adjusting with \(b\), we can reduce variance significantly.

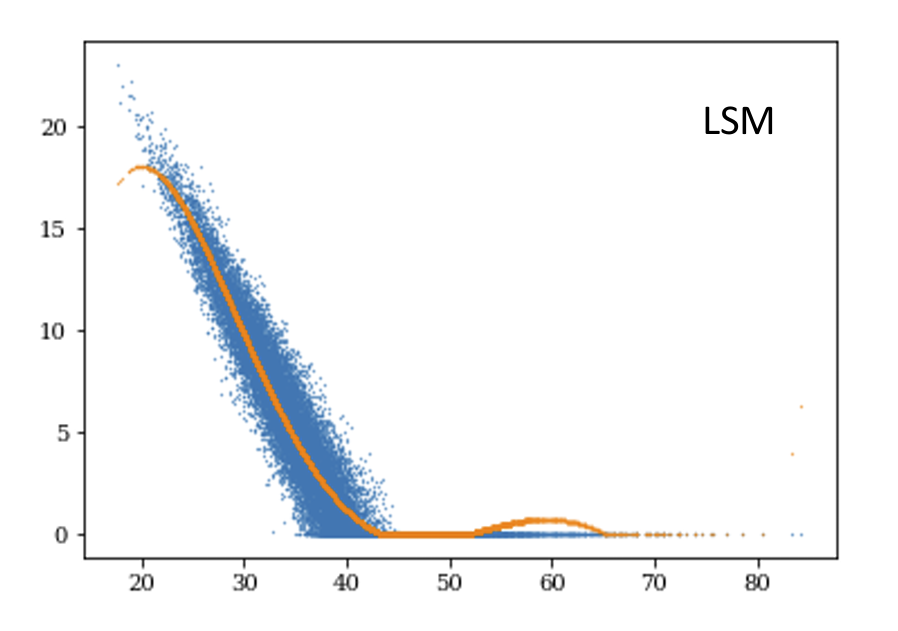

7. Numerical Behavior and Performance

- Variance Reduction: The control variate significantly tightens the confidence interval with minimal additional overhead.

- Polynomial Basis Choice: A small polynomial degree (e.g., 2 or 3) often suffices. Higher degrees can cause numerical instability unless orthogonal polynomials or carefully scaled inputs are used.

- Parallelization: The loop over paths can be parallelized with OpenMP or other frameworks. Typical performance scales well with CPU cores.

8. Conclusion

The Least Squares Monte Carlo (LSMC) method is a game-changer for pricing American options, elegantly addressing the challenge of early exercise decisions. By combining the power of Monte Carlo simulations, regression techniques, and backward induction, it offers a flexible and intuitive framework to approximate the otherwise elusive conditional expectations.

This approach shines in its ability to balance mathematical rigor with practical implementation. It seamlessly integrates variance reduction techniques like control variates, which can dramatically improve precision with minimal computational overhead. Moreover, its modular structure makes it an excellent starting point for extending into more advanced areas, such as exotic options or complex basis functions.

Whether you're a quantitative analyst exploring early-exercise features or a software engineer building robust financial models, LSMC provides the tools you need. With thoughtful design, you can adapt this framework to handle a wide range of financial products, incorporating innovations like orthogonal polynomials, neural networks, or parallelization to enhance performance.

References

- Longstaff, F. A., & Schwartz, E. S. (2001). Valuing American options by simulation: a simple least-squares approach. Review of Financial Studies, 14(1), 113–147.

- Glasserman, P. (2003). Monte Carlo Methods in Financial Engineering. Springer.